Problem: All of a sudden my site has lost a lot of organic search traffic. Is there any way to see in Google Analytics what may have caused this?

Solution: Use Google Analytics to make sure you don’t have duplicate content.

There’s a lot of talk these days about ridding your site of duplicate content. In the world of search engine optimization (SEO) it’s the new black. And for good reason. Depending on how widespread the issue is, duplicate content can wreak havoc on your site’s performance in the search engines. Even seemingly minor canonical issues — such as not having the non-www version of your site redirect to the www version (or vice versa) or having versions of the page with query parameters indexed — can be enough to hamstring your site. So the sooner you can spot it and run it the heck out of town, the sooner you can restore peace and order.

But how do you find these pesky dupes? There are lots of ways to identify dupe content on your site, but I serendipitously stumbled across one report in Google Analytics that showed me at a glance not only which pages on the site were duplicated but also their order of importance. How is that, you ask? Since Google Analytics sorts your reports by pageviews by default, there’s your pecking order right there, especially if you’re looking only at traffic coming from organic search. (To learn how to segment your traffic, check out this video from Google’s Conversion University.)

How to Find Duplicate Content

The report you want to use is the Content by Title report (under Content). I always thought this was kind of a junk report because I prefer to evaluate pages by URI (the part of the URL after the domain). But it’s proved itself to be quite useful and a report I rely on heavily for this purpose.

The nice thing about this report is that if you click on a page title and see multiple line items, that’s a red flag: each line is a different URI serving up the same page. I recently had a client who had more than 20,000 versions of their homepage. <thud>

I’d show you examples of what it looks like in your reports with my usual screenshots, but it’s kind of impossible to demonstrate this one without potentially exposing clients. But start with your most highly trafficked pages and work your way down.
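If you export the report, the same check can be scripted. Here’s a minimal sketch in Python, assuming you’ve pulled rows of (page title, URI, pageviews) out of Google Analytics. The function name and the sample rows are made up for illustration; they aren’t from any real report.

```python
from collections import defaultdict


def find_duplicate_titles(rows):
    """Return titles that appear on more than one URI, busiest first.

    rows: iterable of (title, uri, pageviews) tuples (an assumed export shape).
    """
    by_title = defaultdict(dict)
    for title, uri, pageviews in rows:
        # Sum pageviews per URI in case the same URI shows up more than once.
        by_title[title][uri] = by_title[title].get(uri, 0) + pageviews

    # A title served from more than one URI is a duplicate-content flag.
    dupes = {t: uris for t, uris in by_title.items() if len(uris) > 1}

    # Sort by total pageviews so the most trafficked offenders come first.
    return sorted(dupes.items(), key=lambda item: sum(item[1].values()), reverse=True)


# Hypothetical sample data.
rows = [
    ("Home", "/", 900),
    ("Home", "/?sid=123", 40),
    ("Home", "/index.html", 25),
    ("About Us", "/about", 300),
    ("Contact", "/contact", 120),
    ("Contact", "/contact?ref=footer", 10),
]

for title, uris in find_duplicate_titles(rows):
    print(title, len(uris), sorted(uris))
```

Titles that map to a single URI drop out, so what’s left is your to-do list, already in pecking order.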

What to Do Once You Find Dupes

There are several options you can go with to either resolve duplicate content or at least mitigate its effects.

301 redirect the dupes to the main page.

This is usually the best way to go. Not only will it resolve your duplicate content issues, it will consolidate your reports in Google Analytics. It’s a royal pain sometimes to try to evaluate the performance of a particular page when you have different versions of that page running rampant in your reports.
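How you set up the redirects depends on your server, but the logic is usually the same: work out the one true URL for a request and, if the requested URL is different, send a 301 to it. Here’s a rough sketch of that logic in Python. The host name, the list of parameters to strip, and the function names are all assumptions for illustration, not a drop-in for any particular server.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumptions for this sketch: www is the preferred host, and these
# parameters never change what the page shows.
CANONICAL_HOST = "www.mysite.com"
BARE_HOST = "mysite.com"
IGNORED_PARAMS = {"sid", "ref"}


def canonical_url(url):
    """Return the preferred version of url (www host, junk parameters removed)."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host == BARE_HOST:
        host = CANONICAL_HOST
    kept = [
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name not in IGNORED_PARAMS
    ]
    path = parts.path or "/"
    # Fragments never reach the server, so they are dropped here.
    return urlunsplit((parts.scheme, host, path, urlencode(kept), ""))


def redirect_for(url):
    """Return (301, target) if url is a dupe of another URL, else None."""
    target = canonical_url(url)
    return (301, target) if target != url else None


print(redirect_for("http://mysite.com/?sid=123"))
print(redirect_for("http://www.mysite.com/"))
```

The first call returns a 301 to the www homepage; the second returns None because that URL is already the main version.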

Add a rel canonical tag pointing to the main page.

This tells the search engines, “Credit all the love to this page.” The tag goes in the head of each duplicate version, with its href set to the URL of the main page. Don’t let the name scare you; it’s really easy to set up. Here is more information about canonical tags from Google’s Webmaster Central.

Add the robots noindex, follow meta tag to your dupes.

This tells the search engines, “Go ahead and follow these links, but don’t index this page.” It follows the convention: <meta name="robots" content="noindex, follow" /> Learn more about how to use the robots meta tag.
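Once the tags are in place, it’s worth spot-checking that your dupes actually carry them. Here’s a small sketch using Python’s built-in HTML parser that pulls the canonical link and the robots meta tag out of a page’s HTML. The class name, function name, and sample page are hypothetical, and fetching the page is left out.

```python
from html.parser import HTMLParser


class HeadTagAudit(HTMLParser):
    """Collect the rel=canonical href and the robots meta content, if present."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.robots = None

    def handle_starttag(self, tag, attrs):
        # The parser lowercases tag and attribute names for us.
        attrs = dict(attrs)
        if tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.robots = attrs.get("content")


def audit(html):
    """Return (canonical_href, robots_content); either may be None."""
    parser = HeadTagAudit()
    parser.feed(html)
    return parser.canonical, parser.robots


# Hypothetical duplicate page.
page = (
    "<html><head>"
    '<link rel="canonical" href="http://www.mysite.com/" />'
    '<meta name="robots" content="noindex, follow" />'
    "</head><body></body></html>"
)
print(audit(page))
```

A dupe that comes back with None for both values hasn’t been dealt with yet.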

Add query parameters to both Bing and Google’s Webmaster Tools.

Both Google and Bing give you the option to tell them which query parameters you want them to ignore. Doing this will (at least in theory) remove URLs with those parameters from their indexes. This isn’t my favorite option, but I’ve used it more than a handful of times in situations where a client’s IT team is (let’s just say) a little slow on the trigger.

I’ve definitely seen adding these reduce the number of pages indexed with query parameters but not eradicate them altogether. However, if you have a lot of pages with query parameters being indexed, this may help. (The best way to find out is to search for those query parameters using a site: command — e.g., sid= site:www.mysite.com.)

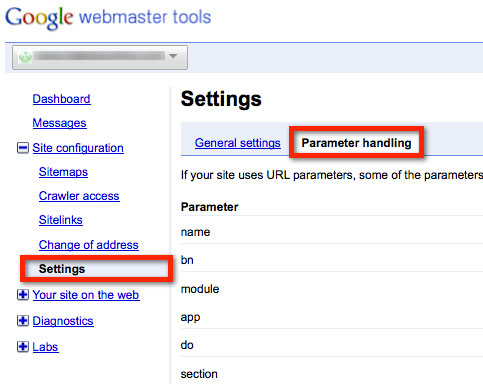

You can add these parameters in Google Webmaster Tools under Site Configuration > Settings > Parameter Handling, as seen below.

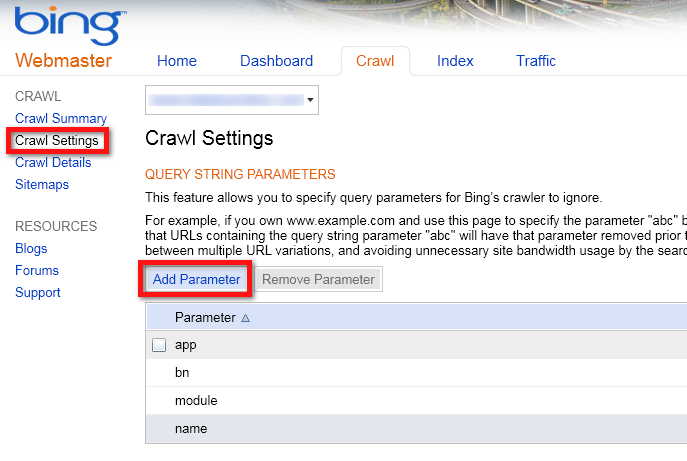

And you can add them in Bing Webmaster Tools under Crawl Settings > Query String Parameters.


Google does the heavy lifting of actually suggesting query parameters it stumbles across. Bing doesn’t do that. So I’d start with Google and then add those parameters to Bing.
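If you want your own list to compare against Google’s suggestions, you could tally the parameter names that show up in the URIs from your reports. This is only a sketch: the function name and the sample URIs are invented, and it assumes you have the URIs in a plain list.

```python
from collections import Counter
from urllib.parse import urlsplit, parse_qsl


def count_query_params(uris):
    """Count how many URIs each query parameter name appears in, most common first."""
    counts = Counter()
    for uri in uris:
        query = urlsplit(uri).query
        # Use a set so a parameter repeated within one URI is counted once.
        names = {name for name, _ in parse_qsl(query, keep_blank_values=True)}
        counts.update(names)
    return counts.most_common()


# Hypothetical URIs taken from a content report.
uris = ["/?sid=1", "/about?sid=2&ref=footer", "/contact?sid=3", "/contact"]
print(count_query_params(uris))
```

The parameters at the top of the list are the ones touching the most pages, so they’re the first candidates to check with a site: search.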

Winnowing these duplicates out of your site is about as much fun as any other kind of weeding, but it’s a necessary evil. I’ve seen sites dramatically turn around after bringing their duplicate content under control.

Thanks! I have been so frustrated by duplicated content issues; I know SEO Moz picks up a lot of them but I never even thought to use the Content section in Analytics…

Great article, but how do you get on when WordPress uses the same header and footer, therefore duplicating the content automatically? My site at http://www.vpnipp.com is saying it’s got lots of duplicate content, but most of it is the footer content, SEO content for title tags, and common keyword strings for SEO. Kinda makes sense but defeats the purpose as well.

Jamie

Duplication in the header and footer may be a bad user experience, but it shouldn’t impact your SEO, unless I’m misunderstanding what you’re saying. Search engines are primarily looking at what’s in the body of a particular page. You should make sure your page titles are unique not only for the search engines (and users) but also to be able to differentiate between pages in Google Analytics.
